One of the most popular buzzwords of recent months is "vibe-coding". It promises users that they no longer need to know how to program: all they have to do is tell an AI agent in natural language what they want, as if "talking" to it, and the agent will produce a ready-made, working program. A quick Google search turns up hundreds of success stories about people who, with no programming knowledge, built a working program in a few hours with one of the coding agents and are now selling it. However, developers who have been working in the industry for years or decades are watching this new trend with suspicion, and many of them fear for their livelihoods as coding agents spread.

I've always thought that an opinion about something is only worth giving if you actually know it, even if you don't go into the smallest details. It doesn't hurt to know what you're talking about.

The first meeting

I've been using Visual Studio for development for a few years now, and like many people, I use GitHub to store my code. When GitHub launched Copilot in 2021, it was advertised as a programming partner that would understand the code I was writing and help me improve it. Sometime in 2022, I decided to give it a try. The $10/month fee wasn't a huge deal, but what really tipped the scales was the 30-day free trial. I figured I had nothing to lose.

Well, those 30 days flew by pretty quickly, and I have to admit that Copilot completely impressed me. At first, I only used it to explain code written by others that I didn't fully understand. Then I pulled out old programs of mine where I had got stuck or that produced mysterious errors. And lo and behold, after analyzing the code, it offered suggestions and new angles (with concrete code snippets) that let me move forward. But the biggest "bang" for me was seeing how well it could take over boring or unloved tasks. It writes complete tests so quickly that I barely have time to think about which cases should be tested. Moreover, it also writes tests for cases I wouldn't even have thought of. It generates the skeleton of a project in a few minutes, and all I have to do is put the "meat" on the bones. Plus, code autocompletion works well for me. I just start typing and it offers complete suggestions. If I like one, I press a key and it's inserted. If I don't, I just keep typing, and after a while I get a revised suggestion.

During that month, I somehow felt that I was enjoying programming again. It was as if I had two helpers by my side: a typing "slave" who writes the long, boring and endlessly repetitive parts for me, and a mentor who can always push me further when I get stuck. At the end of the trial period, I decided not to cancel the subscription, because it's worth that much to me. Of course, it wasn't flawless. It repeatedly suggested code that didn't work on its own because, for example, it was missing a helper function that it had "forgotten" to write. But after one or two refinements, there was always some result.

Of course, this version of Copilot wasn't a coding agent in the modern sense. It only made suggestions and never changed the code automatically. I wouldn't have let it anyway, because I like to understand what goes into my program.

The agent enters the scene

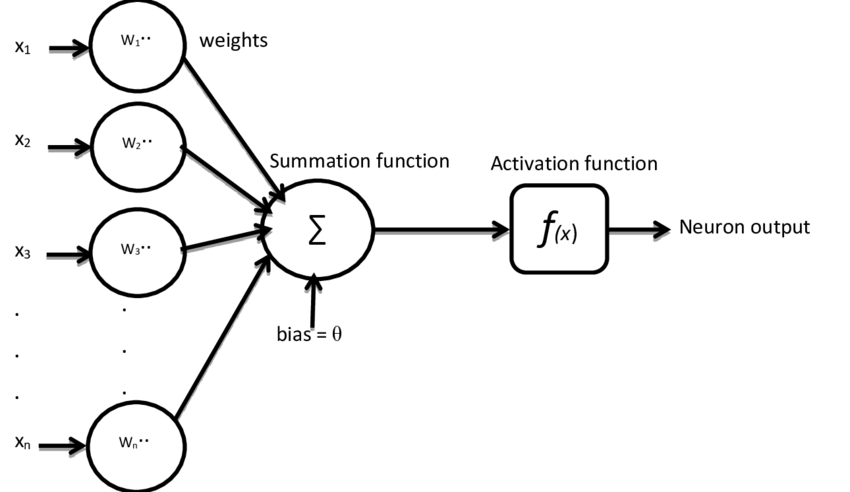

Copilot's agent mode was released in early 2025. Claude Code came out around the same time, but there were already other players on the market, such as Cursor and Replit. The promise was that these agents could build a complete program entirely on their own from nothing but a text prompt: they would not only write the code but also run it, fix the errors, and repeat the whole process until we ended up with a flawlessly working program. I was quite skeptical, because although I had been working in certain areas of AI for some time and studying how large language models work, I somehow didn't believe in it. Of course, video sharing sites quickly filled up with videos proving that the thing worked, but something always felt missing: all of them showed only relatively simple, hobby-like programs with one or two features. But what about a slightly more serious application? For some reason, no one wanted to take on the task of showing that.

As time went by, Copilot's agent mode kept improving. There were constant announcements about new features, newly available language models, and so on, and I was getting more and more eager to try it. I figured it must have outgrown its teething problems by now and that the initial bugs had been fixed. Then, in mid-August, I took a deep breath and gave it a try.

The test

My idea was to test Copilot's agent mode by creating a not-too-complicated fitness app. Since there are several models to choose from, I picked Claude Sonnet 4, as reviews rate it one of the best models for coding.

I already knew that agents are powered by large language models, which need as much detailed context as possible to give an accurate answer. That's why I started with a product requirements document (PRD). I used Claude to compile that too. I described the idea in a prompt of 5-6 sentences and had it generate a PRD. I had the result in 1-2 minutes, but it still needed refining and more detail. After 3-4 iterations, I finally had a document I could use to start the test. According to the document, the planned application has the following features:

- user management (login/logout, managing one's own data)

- daily recording of body data (weight and abdomen, thigh, chest, etc. measurements)

- management of predefined exercises by the admin user and of exercises recorded by the user

- compiling workouts from exercises and recording workout data (duration, calories burned, etc.)

- a Blazor web frontend first, with the option of adding a mobile application later

- because of the previous point, an API with JWT authentication

- PostgreSQL database

- .NET Core environment, Entity Framework for database access
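
To make the feature list a little more concrete, here is a minimal sketch of the entities it implies. It is written in Python for brevity rather than as the C# Entity Framework models the real project would use, and every class and field name is hypothetical, since the PRD's actual schema isn't shown. The only rule it encodes is the split between admin-predefined exercises and exercises a user records for themselves.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class User:
    id: int
    username: str
    is_admin: bool = False


@dataclass
class BodyMeasurement:
    # one record per user per day (hypothetical field set based on the PRD bullets)
    user_id: int
    day: date
    weight_kg: float
    abdomen_cm: Optional[float] = None
    thigh_cm: Optional[float] = None
    chest_cm: Optional[float] = None


@dataclass
class Exercise:
    id: int
    name: str
    owner_id: Optional[int] = None  # None = predefined by the admin user


@dataclass
class Workout:
    user_id: int
    exercise_ids: list[int] = field(default_factory=list)
    duration_min: int = 0
    calories_burned: int = 0


def visible_exercises(user: User, exercises: list[Exercise]) -> list[Exercise]:
    """Exercises a user can pick from: the predefined ones plus their own."""
    return [e for e in exercises if e.owner_id is None or e.owner_id == user.id]


if __name__ == "__main__":
    alice = User(1, "alice")
    catalog = [Exercise(1, "Squat"), Exercise(2, "Plank", owner_id=1), Exercise(3, "Row", owner_id=2)]
    print([e.name for e in visible_exercises(alice, catalog)])  # ['Squat', 'Plank']
```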

I created a new folder for the project, launched VS Code, attached the PRD to Copilot, and asked it to build the application based on it. Then I sat back and waited. After some thinking, the machine got to work. In the chat window, I could watch more and more files being created, organized into separate projects by function. Once the data models and the API were done, Copilot stopped and asked me for the database server's access details. When I entered them, the work continued. I watched Copilot create the database, build and run the finished program, and recognize and fix the errors that came up. Whenever it reached a step that was more critical for the runtime environment (for example, modifying the file system), it stopped and asked for permission to continue. Finally, after 15-20 minutes, the API was ready, with a working database and data models. Hats off so far!
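
The write-build-fix cycle described above can be sketched as a simple loop. This is not how Copilot is actually implemented (its internals aren't documented here); `generate`, `build`, `is_critical` and `ask_permission` are hypothetical stand-ins for the model call, the compile-and-run step, the "is this risky?" check, and the confirmation prompt.

```python
from typing import Callable, Optional


def agent_loop(
    task: str,
    generate: Callable[[str, Optional[str]], str],  # hypothetical model call: (task, last error) -> code
    build: Callable[[str], Optional[str]],          # hypothetical build/run: error message, or None on success
    is_critical: Callable[[str], bool],             # e.g. the step modifies the file system
    ask_permission: Callable[[str], bool],          # human confirmation
    max_rounds: int = 5,
) -> tuple[Optional[str], int]:
    """Return (code that built cleanly, or None; number of rounds used)."""
    error: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = generate(task, error)        # feed the previous error back to the model
        if is_critical(code) and not ask_permission(code):
            return None, round_no           # the user declined: stop here
        error = build(code)
        if error is None:
            return code, round_no           # builds and runs: the agent reports "done"
    return None, max_rounds                 # gave up after max_rounds attempts
```

Note that the loop stops as soon as the build is clean; nothing in it checks that the program actually does what the PRD asks, which is exactly the gap the rest of this test ran into.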

Then it asked: "Should I continue with creating the web frontend?" Of course, that's why we're here!

The Blazor frontend was ready in another 5-10 minutes. The project seemed to build and run, but did it actually work? Copilot reported that it was done and that I should try the program. And here began a 3-hour ordeal.

Since my goal was to test the agent working on its own, I decided not to fix anything in the code myself, but to report the problems I ran into only through the prompt.

So the program started and the interface appeared in the browser. The design is pretty minimal, but that wasn't the focus; functionality was. I start a registration, enter the data, but nothing changes after submitting. I check the database: no new user was created. I describe the problem to the agent, it thinks about it, then tells me it found and fixed the error. Another try, same result. Problem description, thinking, fixing. Another try, still nothing. Since this was a bit suspicious, I checked the messages in the backend API console. A request was reaching the API, but it was immediately rejected with a 404 error. So it had managed to produce frontend code that calls a nonexistent endpoint of the API it had produced itself! A little confused, I report the problem to Copilot. Thinking, then it confirms that I'm right. It fixes the error, and now I can register. According to the interface I'm logged in, although my username doesn't appear in the menu. OK, let's set that aside for now. I open the body data page and try to record data. It fails: after submitting, the loading icon just keeps spinning and nothing happens. I restart the application and, having learned my lesson, watch the messages in the console windows. Based on these, the token from the previous login is still there, so the application lets me in. Another attempt to record data, and after submitting, a 401 (Unauthorized) error appears in the console. Interesting. Problem description to Copilot, thinking, ideas, fixing, trying again. It doesn't work. Finally, after 3 hours of struggle and 15-20 fixes, I gave up because I was tired.

But why doesn't it work?

The problem didn't let me rest, so 3 days later I took out the program again to find out why it wasn't working. The backend is relatively straightforward, a standard .NET Core Web API. What was a little strange was that it didn't use ASP.NET Core Identity to manage users, but otherwise everything seemed to be in order. The frontend components are also quite straightforward: HTML elements spiced up with some C# code. What struck me was that almost all the components were wrapped in a component called AuthComponent. Its job is to check whether the user is logged in and display either the wrapped component or the login interface accordingly. For this, it uses a service called AuthService, which tries to read the JWT token from the browser's local storage, set the login status based on it, and read the username from the token. AuthService in turn relies on a separate TokenService for this.
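
For readers who haven't looked inside one: a JWT is three base64url-encoded segments (header, payload, signature) separated by dots, so reading the username from a stored token, as AuthService is meant to, only requires decoding the middle segment. The Python sketch below shows just that. It deliberately does not verify the signature (only the API, which holds the signing key, can do that), and the claim name `name` is an assumption, since the generated code's actual claim names aren't shown.

```python
import base64
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments have their '=' padding stripped; restore it before decoding
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def read_payload(token: str) -> dict:
    """Decode the payload of a JWT WITHOUT verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a JWT: expected header.payload.signature")
    return json.loads(_b64url_decode(parts[1]))


def username_if_valid(token: str, claim: str = "name", now: Optional[float] = None) -> Optional[str]:
    """Return the username claim (hypothetical name), or None if the token has expired."""
    payload = read_payload(token)
    now = time.time() if now is None else now
    if "exp" in payload and payload["exp"] <= now:  # 'exp' is seconds since the Unix epoch
        return None
    return payload.get(claim)
```

Whatever the frontend reads from the token this way is only for display; the API itself sees the token when it is sent in the conventional `Authorization: Bearer <token>` header.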

I started the application as a test and opened the frontend project's console. I was shocked to see that the AuthService method that checks the user's token was called at least 10 times before the interface appeared. OK, I'll have to look into this carefully. I log in, but the username still doesn't appear in the menu, and I can't record any data because the API keeps returning 401 errors. I can't log out either. On a sudden idea, I start Postman and try to access the API from there. Login works, and with the token I get back I can also send data to the database: no 401 error there. Interesting.

When I looked at the frontend code closely, my eyes widened. AuthComponent was supposed to check the user's login status using AuthService and display the wrapped components accordingly. However, AuthService was also injected separately into each wrapped component, and each of them checked the login status on its own. Why? Moreover, since these components all tried to check the user's status at the same moment when they started, AuthService used all sorts of tricky locking solutions to keep them from racing each other to set the status. It would have been much simpler if AuthComponent had passed the user's status down through a parameter. With some work, I "untangled" these anomalies, simplified the code in a few places, and restarted the application. I was happy to see that AuthService was now called only once. However, the API still rejected the requests with a 401 error.
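
The difference between the two designs is easy to show in miniature. In the Python sketch below (all names hypothetical; in Blazor the equivalent would be a parameter or cascading value set by AuthComponent), the first function mimics the generated code, where every child asks the service itself, while the second checks once in the parent and hands the same immutable state to every child, so there is nothing to race on and no lock is needed. The counter makes the "called once vs. called N times" difference visible.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AuthState:
    is_logged_in: bool
    username: Optional[str] = None


class FakeAuthService:
    """Hypothetical stand-in for AuthService; counts how often the token is checked."""

    def __init__(self, username: Optional[str]):
        self._username = username
        self.check_count = 0

    def check(self) -> AuthState:
        self.check_count += 1
        return AuthState(self._username is not None, self._username)


def render_each_child_checks(auth: FakeAuthService, children: list[str]) -> list[str]:
    # Pattern from the generated code: every child component checks on its own
    return [f"{c}: {auth.check().username}" for c in children]


def render_parent_checks_once(auth: FakeAuthService, children: list[str]) -> list[str]:
    # Parent (the AuthComponent role) checks once and passes the state down
    state = auth.check()
    if not state.is_logged_in:
        return ["login page"]
    return [f"{c}: {state.username}" for c in children]
```

Because `AuthState` is frozen, children can read it but not change it, so the only place login status is ever decided is the parent.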

It took me about 2 hours to figure it out. I dug into how JWT tokens work, how .NET Core handles them, and what data the token should contain. I tried all of this, but to no avail. In the end, I copied the HTTP headers from the Postman requests one by one into the frontend code, but that didn't work either. I was about to give up when something in the API project's code caught my eye. The CORS policy settings looked suspicious, because the URL the frontend project runs on didn't seem to be among them. I checked, and sure enough: the frontend was connecting from a URL that wasn't included in the CORS settings. Once I fixed this, things started working. I don't understand how Copilot didn't notice this.
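
Part of what makes this kind of bug easy to miss is that a CORS origin is the exact combination of scheme, host and port, so `https://localhost:7001` and `http://localhost:5001` are different origins even though both are "localhost". The Python sketch below models an exact-match allowlist like the one a CORS policy holds (in ASP.NET Core, the list passed to the policy's `WithOrigins(...)` call). The URLs and ports are made up for illustration, not taken from the project.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str) -> tuple[str, str, int]:
    """Reduce a URL to its origin: (scheme, host, port); the path is ignored."""
    parts = urlsplit(url)              # urlsplit lowercases the scheme
    scheme = parts.scheme
    port = parts.port or DEFAULT_PORTS[scheme]
    return scheme, parts.hostname or "", port  # .hostname is lowercased too


def is_origin_allowed(request_origin: str, allowed: list[str]) -> bool:
    # Exact origin match: a different scheme or port means "not allowed"
    return origin_of(request_origin) in {origin_of(a) for a in allowed}
```

With `allowed = ["https://localhost:7001"]`, a frontend served from `http://localhost:5001` is rejected, which is the shape of the mismatch described above.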

The only thing left unsolved was why logout didn't work. All I managed to find out was that the event handler in the NavMenu component wasn't called when the button was clicked. While looking for the reason, I noticed a few lines of code in the component that would be better placed in the lifecycle method that runs after the component is initialized. After I moved them there, I noticed that these lines didn't run either. So the component's initialization was getting stuck somewhere. After further investigation and reading the documentation, it turned out that the component's RenderMode setting wasn't correct. Once I fixed this, everything suddenly fell into place: the user's name appeared in the menu and logout worked.

Then I found a few more interesting bugs. For example, I couldn't record my own exercises in the database. As it turned out, the format of the data sent by the frontend didn't match the data model used by the backend. Certain frontend pages were also only half-finished. But I didn't bother with these anymore, because I felt this was enough of an experiment.
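
A frontend/backend mismatch like this is cheap to catch automatically by comparing, key by key, the JSON the frontend sends with the fields the backend model expects. The Python sketch below does exactly that; the field names (`exerciseName`, `name`, `durationSec`) are invented for illustration, since the actual models aren't shown. In ASP.NET Core, the default System.Text.Json handling skips properties it doesn't recognize, so such a mismatch tends to surface as missing values or validation errors rather than a clear "wrong field" message.

```python
import json


def contract_diff(payload_json: str, expected_fields: set[str]) -> dict[str, set[str]]:
    """Compare a JSON object's keys with the fields a backend model expects."""
    sent = set(json.loads(payload_json).keys())
    return {
        "missing": expected_fields - sent,     # backend expects it, frontend omits it
        "unexpected": sent - expected_fields,  # frontend sends it, backend ignores it
    }
```

For example, `contract_diff('{"exerciseName": "Plank", "durationSec": 60}', {"name", "durationSec"})` reports `name` as missing and `exerciseName` as unexpected.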

Conclusion

Coding agents can do a pretty good job with simple, well-defined tasks. But they are still a long way from building even a moderately complex program on their own. A detailed, well-defined context is essential for solving tasks. For more complex programs consisting of several parts, however, it is worth breaking the task into smaller units, having the agent build them one by one over several iterations, then adding the completed modules to the context and moving on to the next part.

I also think it is important to always keep human review in the process. The large language models behind the agents were trained on publicly available codebases, which often contain unoptimized, test-only, or vulnerable code. As a result, agent-generated code is likely to contain security bugs or suboptimal code, which can cause further problems in a program released to production.

So, in my opinion, coding agents won't take developers' jobs for a while, but they will fundamentally transform them. Junior developers will have a harder time entering the profession, as agents can take over the simpler, more automatable coding tasks juniors have traditionally done. More experienced developers will spend more time reviewing and fixing agent-generated code.